In the fast-moving e-commerce environment, it is essential to know how to locate key product identifiers such as UPCs, ASINs, and Walmart product codes. Barcodes represent this product information as images or characters.

There are two main types of barcodes:

1D Barcodes – These are the familiar vertical bars with numbers printed underneath. A scanner reads them to identify the product, where it comes from, and who manufactured it. They can be numeric only, like UPC or EAN, or can carry alphanumeric identifiers such as an ASIN or SKU.

2D Barcodes – This group includes QR codes, which use squares, dots, and other shapes to store details such as product information or a link to a product image.

This system lets businesses of all kinds retrieve product information quickly using smartphones or scanners.

Understanding UPC, ASIN and Walmart Product Codes

UPC (Universal Product Code)

A UPC is a standardized barcode used to identify products. It consists of 12 digits and is widely used in retail environments.

Components:

Manufacturer Code (Prefix): The first digits, 6 in the classic layout, are assigned to the manufacturer or brand and identify the company that produces the product. Longer prefixes are also issued, which leaves fewer digits for the product code.

Product Code: The next 5 digits identify the specific product made by the manufacturer. This helps distinguish between different products made by the same manufacturer.

Check Digit: The final digit is a check digit used to validate the accuracy of the UPC. It ensures that the code has been scanned correctly and that there are no errors.

Representation: The UPC is often displayed as a series of black bars and white spaces on product packaging, which can be scanned using a barcode reader.
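The check digit rule can be made concrete with a short sketch. The function names below are illustrative, not part of any library, and the 6/5/1 split simply follows the layout described above (real prefixes can be longer). The check digit uses the standard UPC-A weighting: digits in odd positions count three times, digits in even positions count once.

```python
def upc_check_digit(first_eleven: str) -> int:
    """Compute the UPC-A check digit from the first 11 digits."""
    if len(first_eleven) != 11 or not (first_eleven.isascii() and first_eleven.isdigit()):
        raise ValueError("expected exactly 11 digits")
    digits = [int(ch) for ch in first_eleven]
    # Positions are counted from 1, so index 0 is position 1 (odd).
    odd_sum = sum(digits[0::2])   # positions 1, 3, 5, 7, 9, 11
    even_sum = sum(digits[1::2])  # positions 2, 4, 6, 8, 10
    total = odd_sum * 3 + even_sum
    return (10 - total % 10) % 10


def is_valid_upc(code: str) -> bool:
    """Return True if code is 12 digits and its last digit matches the check digit."""
    if len(code) != 12 or not (code.isascii() and code.isdigit()):
        return False
    return upc_check_digit(code[:11]) == int(code[11])


def split_upc(code: str) -> dict:
    """Split a UPC using the 6/5/1 layout described in the article (an assumption)."""
    if not is_valid_upc(code):
        raise ValueError("not a valid 12-digit UPC")
    return {"prefix": code[:6], "product": code[6:11], "check_digit": code[11]}


print(is_valid_upc("036000291452"))
print(split_upc("036000291452"))
```

A check like this catches most single-digit typing or scanning errors, but it only confirms that the number is well formed, not that the product exists.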

Purpose

Inventory Management: UPCs help businesses keep track of stock levels, streamline reordering processes, and manage inventory more effectively.

Sales Processing: During checkout, UPCs are scanned to quickly retrieve product information and prices, speeding up the transaction process and reducing errors.

Product Identification: UPCs ensure that the correct product is being sold and purchased, which helps in managing product data across different retailers.

Usage

Retail Environment: UPCs are used in physical stores to track products and manage sales. They are scanned at checkout counters, which helps in updating inventory and processing transactions.

Online Retail: UPCs are also used by online retailers to identify products and manage listings. They help in maintaining consistency and accuracy in product information.

ASIN (Amazon Standard Identification Number)

An ASIN (Amazon Standard Identification Number) is a unique identifier used by Amazon to catalog products in its marketplace. It is an alphanumeric code, usually 10 characters long, that distinguishes each product listed on Amazon.

Structure

Format: ASINs are alphanumeric, which means they can include both letters and numbers. The code itself does not follow a specific pattern or structure outside of being unique to each product.

Uniqueness: Each product has a distinct ASIN, which helps Amazon track and manage the vast number of products available on its platform.

Length: An ASIN is typically 10 characters long.

Components: The ASIN does not have a specific structure like a UPC. It is a unique code assigned by Amazon, and its format can vary, including both letters and numbers.
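Because an ASIN carries no internal structure, about the only check that can be done offline is on its shape. The sketch below assumes the common form of exactly 10 letters and digits. The name `looks_like_asin` is hypothetical, and a passing value is not guaranteed to be an ASIN that Amazon has actually assigned.

```python
import re

# Assumption: an ASIN is exactly 10 characters, each an uppercase letter or a digit.
ASIN_PATTERN = re.compile(r"[A-Z0-9]{10}")


def looks_like_asin(value: str) -> bool:
    """Return True if value has the shape of an ASIN (this is not a lookup)."""
    candidate = value.strip().upper()  # tolerate stray whitespace and lowercase input
    return ASIN_PATTERN.fullmatch(candidate) is not None


print(looks_like_asin("B000000000"))   # placeholder value with the right shape
print(looks_like_asin("B00-000000"))   # contains a hyphen, so the shape is wrong
```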

Purpose

Product Cataloging: ASINs are used to organize and manage products within Amazon’s ecosystem. Each product listing on Amazon has a unique ASIN that differentiates it from other products.

Search and Discovery: Customers use ASINs to search for specific products on Amazon’s platform. It helps in locating products quickly and accurately.

Listing Management: Sellers use ASINs to create and manage their product listings on Amazon. It ensures that each product is correctly categorized and identified.

Usage

Amazon Marketplace: ASINs are specific to Amazon’s ecosystem. They are used for searching, managing, and cataloging products within Amazon’s site and services.

Product Information: Each ASIN corresponds to a product listing, which includes product details, reviews, and availability information on Amazon.

Walmart Product Codes

Walmart Product Codes are unique identifiers used by Walmart to manage and catalog products within its extensive inventory system. These codes are similar in purpose to ASINs and UPCs but are specific to Walmart.

Structure

Format: Walmart Product Codes can vary in format, but they generally consist of numeric or alphanumeric sequences designed to uniquely identify each product within Walmart’s system.

Purpose

Product Organization: Walmart Product Codes help in organizing products, managing stock, and facilitating efficient tracking of inventory. They ensure that each item is accurately categorized and easily accessible.

Search and Listing: These codes are used by Walmart’s internal systems and can also be used by sellers to list products on Walmart’s platform. They help in maintaining order and accuracy in Walmart’s product database.

Usage

Sellers: To add products to Walmart’s catalog or manage existing listings, sellers use Walmart Product Codes to ensure their items are correctly integrated into Walmart’s inventory system.

Consumers: For shoppers, these codes enable a streamlined search experience, helping them find specific products quickly within Walmart’s vast selection.

How to Extract ASIN, UPC and Walmart Product Codes?

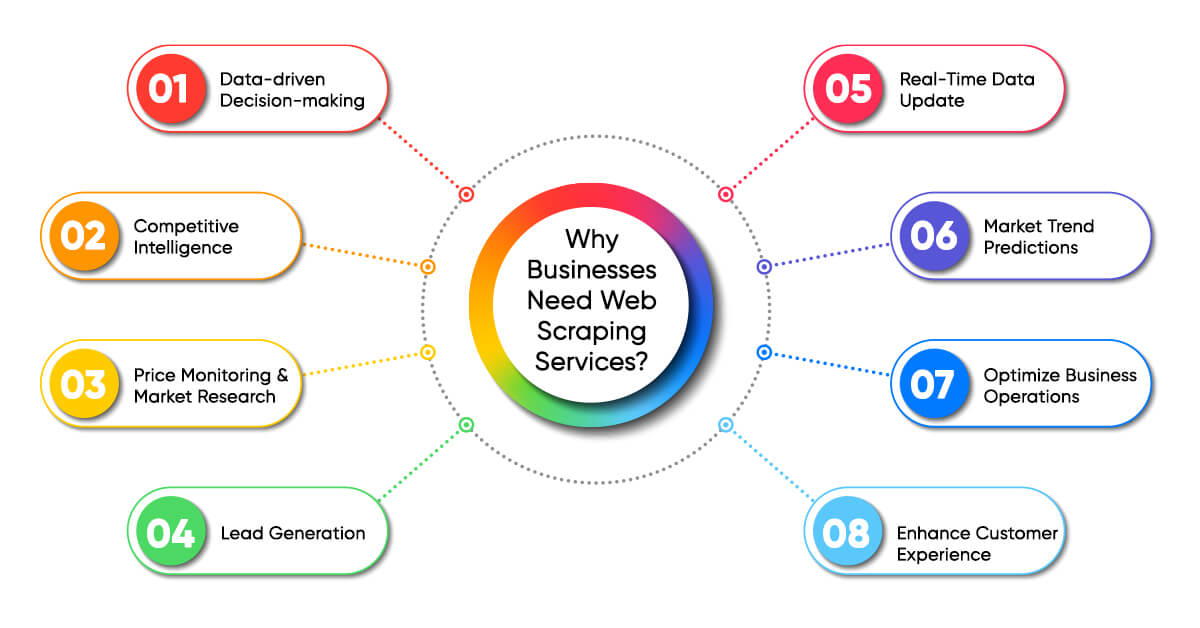

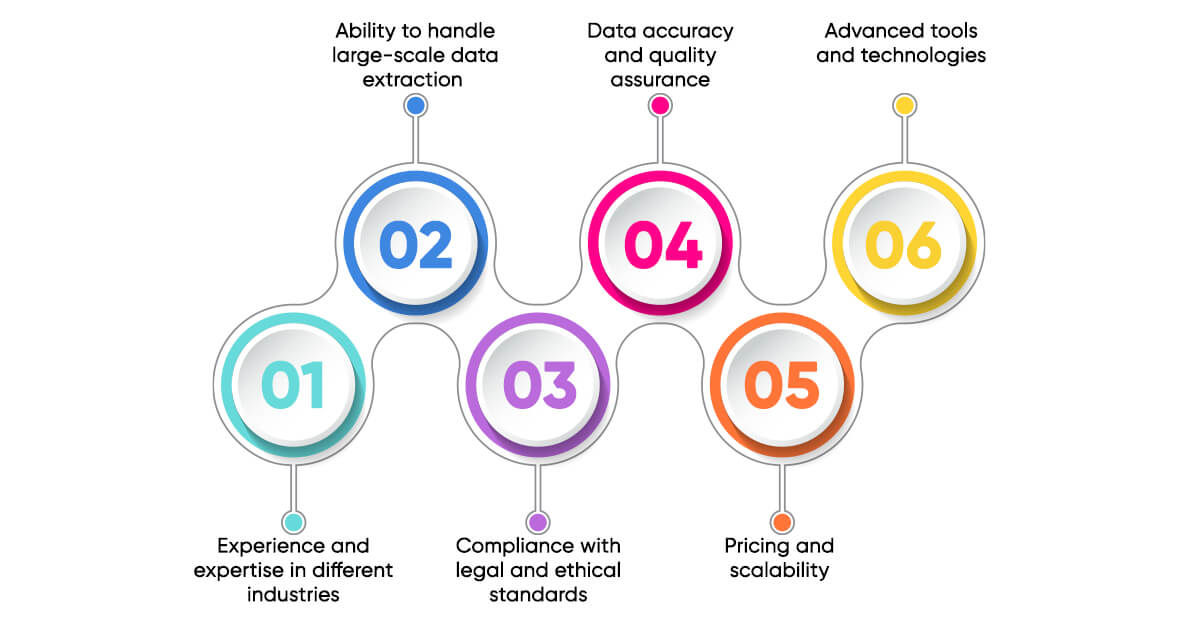

Web scraping is especially useful when you are dealing with many products at once or need periodic updates of product data. A range of tools can be configured to do this work systematically and reliably.

How to Extract ASIN Information?

Extracting the ASIN (Amazon Standard Identification Number) is crucial for any business or individual that needs to compile and organize product information from Amazon. The process can be simplified into a few easy steps:

Go to the Product Page on Amazon

First, go to the page of the specific product you are interested in. Amazon's catalog contains millions of products, which makes it a good reference source for product information, including the ASIN.

Find the ASIN in the Product Details Section

On the same page, scroll down to the section labeled “Product Details”. The ASIN is normally listed there, labeled either as ASIN or as Amazon Standard Identification Number.

Two Ways to Extract the ASIN

Manual Method: You can simply copy the ASIN from the product page. This works for one or two products but is not convenient when handling large quantities of data.

Web Scraping: For large operations, web scraping tools are available. These tools can save a lot of time by reading through the pages and collecting ASINs and other product details in one pass.
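As a rough illustration of the scraping route, the sketch below pulls an ASIN out of page markup that has already been downloaded. Several things here are assumptions: the sample HTML is invented, the value `B0EXAMPLE1` is a placeholder, and a real product page may lay out its details section differently, so the pattern would need adjusting.

```python
import re
from html import unescape


def find_asin_in_html(html: str):
    """Return the first 10-character code that follows an 'ASIN' label, or None."""
    # Replace tags with spaces and decode entities so only the visible text remains.
    text = unescape(re.sub(r"<[^>]+>", " ", html))
    # Assumption: the label "ASIN" is followed, after punctuation or spaces, by the code.
    match = re.search(r"\bASIN\b\W*([A-Z0-9]{10})\b", text)
    return match.group(1) if match else None


# Invented markup standing in for a downloaded "Product Details" section.
sample = "<table><tr><th>ASIN</th><td>B0EXAMPLE1</td></tr></table>"
print(find_asin_in_html(sample))
```

Fetching the pages is left out on purpose: how pages may be retrieved depends on the site's terms and on whatever tool is used for the download.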

How to Extract UPC Information?

Extracting UPC (Universal Product Code) information is essential for various business and inventory management tasks. Here’s how to retrieve UPC information using different methods:

Check the Product Packaging

For physical goods, the UPC normally appears as a barcode printed on or attached to the product's packaging. The barcode combines lines and digits and assigns a distinct number to the product. All you need to do is look at the packaging and write down the listed UPC.

Barcode Scanner Application

You can also use a smartphone to scan the barcode. Many mobile phones have a built-in barcode scanning feature, or you can install a barcode scanner application. Open the application, aim the smartphone’s camera at the barcode, and let the application scan the code. The UPC will be displayed as plain text so you can note it down or refer to it whenever necessary.

Retrieve Through an API or Web Scraping

For larger inventories or e-commerce data, using APIs or web scraping can be more efficient. Some platforms provide APIs, such as Walmart’s UPC lookup, that let you automatically access UPC data for multiple products. This is ideal for managing bulk data.

Alternatively, web scraping can extract UPC information from websites when APIs aren’t available. Tools can be programmed to scan web pages, find UPCs, and organize them in a structured format, making it useful for extensive product data extraction.
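One way the "find UPCs and organize them" step might look is sketched below. It scans text that has already been retrieved for 12-digit runs and keeps only those whose check digit is consistent, which filters out many unrelated numbers such as order IDs. The function names and the sample text are illustrative assumptions.

```python
import re


def _check_digit_ok(code: str) -> bool:
    """Verify the UPC-A check digit of a 12-digit string."""
    digits = [int(ch) for ch in code]
    # Odd positions (1st, 3rd, ... 11th) are weighted 3, even positions are weighted 1.
    total = 3 * sum(digits[0:11:2]) + sum(digits[1:11:2])
    return (10 - total % 10) % 10 == digits[11]


def extract_upcs(page_text: str) -> list:
    """Return unique, check-digit-valid 12-digit codes in order of appearance."""
    found = []
    # The lookarounds make sure a longer run of digits is not cut into a false match.
    for candidate in re.findall(r"(?<![0-9])[0-9]{12}(?![0-9])", page_text):
        if _check_digit_ok(candidate) and candidate not in found:
            found.append(candidate)
    return found


sample_text = "UPC: 036000291452 | Order 123456789013 | UPC again: 036000291452"
print(extract_upcs(sample_text))
```

A number that passes this filter is only plausibly a UPC. Matching it against the label next to it on the page gives more confidence.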

Choosing the Right Method

Manual extraction from packaging works well for small operations. Barcode scanner apps are convenient for scanning individual products. APIs and web scraping are ideal for large inventories because they are automated.

Each method has its own benefits, and it is up to you to decide which one to use. UPC data is essential for tracking inventory, setting prices, and identifying products, especially when dealing with large sites like Walmart.

How to Extract Walmart Product Codes?

To extract Walmart product codes, follow these steps using various methods:

Visit the Product Page on Walmart's Website

Start by going to the specific product page on Walmart’s official site. Walmart offers a vast catalog of products, making it an excellent resource for product data.

Locate the Product Code in ‘Product Details’

Once you are on the product page, scroll toward the end of the page and look for the “Product Details” tab. Here you can usually find the product's identification number, such as the UPC or the Walmart item number. The code may be labeled “Product Code”, “Item Number”, or “Universal Product Code”.
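If the details section has been copied or scraped as plain text, the labeled fields can be picked out with a few lines of code. This sketch assumes one "Label: value" pair per line, which may not match how a given page is laid out. The labels are the three named above, and the function name and sample values are made up for illustration.

```python
# Labels taken from the description above; real pages may use other wording.
LABELS = ("Product Code", "Item Number", "Universal Product Code")


def extract_labeled_codes(details_text: str) -> dict:
    """Collect values for the known labels from 'Label: value' lines."""
    wanted = {label.lower(): label for label in LABELS}
    codes = {}
    for line in details_text.splitlines():
        label, separator, value = line.partition(":")
        key = label.strip().lower()
        # Keep the line only if it has a colon, a known label, and a non-empty value.
        if separator and key in wanted and value.strip():
            codes[wanted[key]] = value.strip()
    return codes


sample_details = """Brand: Example Co
Universal Product Code: 036000291452
Item Number: 12345678"""
print(extract_labeled_codes(sample_details))
```

Matching whole labels line by line avoids a subtle trap: a plain substring search for "Product Code" would also hit the "Universal Product Code" line.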

Choose Your Extraction Method

Manual Extraction: For small operations, you can copy the product codes from the product pages by hand. This is fine for a handful of products but becomes tedious and time-consuming for large projects.

Web Scraping Tools: Web scraping is useful if you need large amounts of product codes at once or on a recurring basis. These tools or scripts can be set up to automatically extract product data from multiple pages, saving time and effort.

API Access: If Walmart offers an API for product data, you can retrieve product codes programmatically. This is ideal for businesses managing large inventories or e-commerce operations, allowing for seamless access to product details.

Challenges of Scraping UPC, ASIN, and Walmart Product Codes
